Deepfakes represent a severe and imminent threat to businesses worldwide. They are videos, audio recordings, or images generated with AI techniques that can be practically indistinguishable from real ones, so almost anybody can be duped by them. For companies, deepfakes are more than a technical problem: they can lead to financial losses, reputational damage, and even legal trouble. Imagine, for instance, a fake video of a CEO making damaging remarks, or a cloned voice that sounds authentic instructing the finance team to wire millions of dollars to a fraudster. These threats are real and escalating. This article explains what deepfakes are, looks at real cases, examines which industries are most at risk, and outlines how a business can protect itself in practice while staying compliant with new regulations.

1. Deepfakes: Some Assembly Needed

Deepfakes are not superficial fakes but multimedia Frankensteins stitched together with advanced artificial intelligence, most notably Generative Adversarial Networks (GANs), which pit two AI models against each other. The generator produces synthetic content, such as video or voice, while the discriminator judges whether it looks real. The two compete until the discriminator can no longer tell the fake from the real thing.
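For readers who want the underlying idea, the standard GAN formulation writes this contest as a minimax game between the generator $G$ and the discriminator $D$. Here $D(x)$ is the discriminator's estimated probability that a sample $x$ is real, and $z$ is random noise that the generator turns into a fake sample $G(z)$:

```latex
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to raise this value by scoring real samples near 1 and fakes near 0; the generator tries to lower it by producing fakes that the discriminator scores as real.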

Deepfakes can mimic:

- Faces: Swapping one person's face onto another person in a video.

- Voices: Replicating a person's tone and accent so the audio sounds real.

- Videos: Making it appear that someone said or did something they never did.

Unlike older scams such as phishing, deepfakes are more difficult to detect since they appear more lifelike. This makes them a very potent weapon for cybercriminals to use against businesses.

2. Deepfake Threats in the Real World

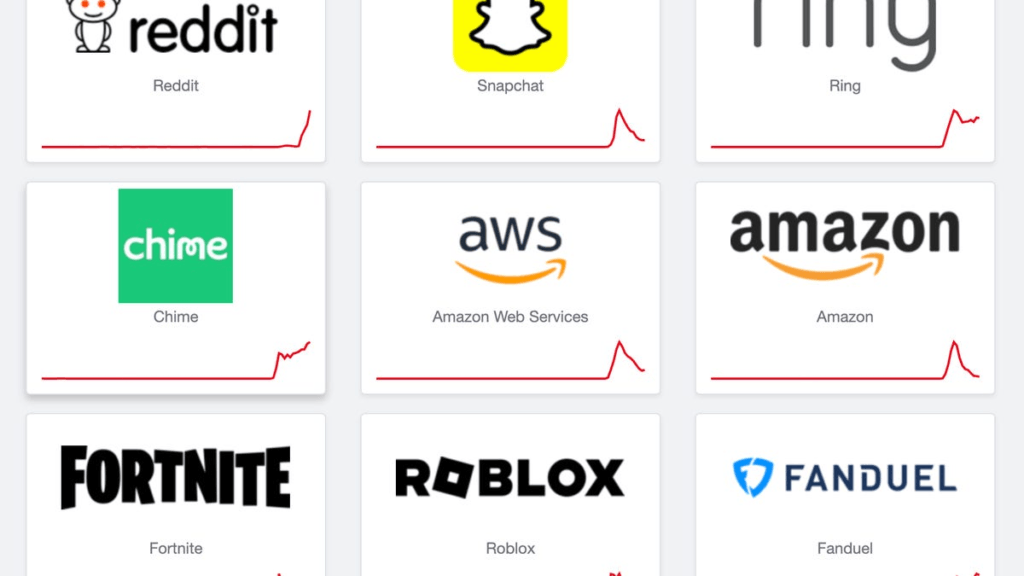

Businesses and society have already faced problems because of deepfakes. Some important examples are as follows:

- 2019 UK Energy Firm Fraud: Criminals used AI-generated audio that mimicked the voice, including the German accent, of the chief executive of a UK energy firm's German parent company. The call persuaded the UK firm's CEO to transfer about $240,000 to a fraudulent account. The voice was convincing enough that he did not suspect foul play.

- 2020 Political Deepfakes: Misleading deepfake videos of politicians circulating online are not business-specific, but they show how much havoc fake media can wreak on credibility.

- 2023 Fraud-as-a-Service: ‘Deepfake Voice Cloning’ services were openly advertised on Telegram, allowing even unskilled attackers to create highly convincing fake voices of executives.

These cases show that deepfakes are a real and serious cyber threat, not a future concept. Businesses must take action to protect themselves.

3. How Deepfakes Affect Different Industries

Deepfakes uniquely affect different industries, and understanding these implications will help companies better prepare:

- Financial Services: Deepfaked voice approvals for wire transfers, or synthetic CEO videos used to slip past security checks, could cause billions in financial losses.

- Media and Communications: Deepfake videos could show corporate leaders spreading false information, damaging the company's reputation and brand trust.

- Manufacturing and Tech: Attackers could use deepfakes to impersonate research leaders in virtual meetings to steal trade secrets, or to send misleading vendor communications that disrupt the supply chain.

- Government & Public Sector: Misinformation can spread through forged public statements, fabricated speeches, or fake attacks on political opponents, all of which erode public trust.

- Healthcare: Impersonated doctors' voices or forged patient records could disrupt care and damage patient trust in the healthcare system.

By knowing which threats are most likely in their sector, such as voice fraud in finance or fake executive videos in media, companies can focus their defenses where they matter most.

4. How to Protect Your Business from Deepfakes

Protecting a business from deepfakes takes a cocktail of training, technology, and policies. Here are seven such strategies:

- Employee training: Train employees, especially those in finance, HR, and leadership roles, to spot the signs of a deepfake. Add deepfake examples to your phishing training and require staff to verify any unusual requests.

- Verification processes: Confirm sensitive requests through a second, separate channel, such as email or text message, rather than trusting a single call or video. Use multi-factor authentication (MFA) and require dual approvals for large transactions.

- Deepfake detection tools: Tools such as Microsoft Video Authenticator and Sensity.ai can help flag manipulated media. However, they are imperfect: they can miss the most advanced deepfakes, and they can also wrongly flag genuine content as fake.

- Control over Digital Assets: Control the public release of video and voice recordings of your executives, and apply a digital signature or cryptographic seal to authentic videos to prove they are genuine.

- AI Watermarking and Provenance: Adopt industry standards such as C2PA, backed by Adobe and Microsoft, which attach cryptographically signed provenance information to media and can be combined with invisible watermarks, so that anyone can check whether content is genuine.

- Threat Monitoring: Keep an eye on social media, the news, even the dark web, for any signs of forged content about your company or your leaders. Leverage threat intelligence to catch risks as early as possible.

- Response plan: Establish a deepfake response plan, including steps to verify suspected fake content, communicate with the public, and take legal action such as filing takedown requests.

Together, these measures create layers of protection that make it harder for deepfakes to cause real harm.
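The verification-processes strategy above can be expressed as a simple policy check. This is a minimal sketch, not a real payments API: the `TransferRequest` class, the `may_execute` function, the channel names, and the 10,000 threshold are all hypothetical. It encodes two rules: a request must be confirmed on a different channel from the one it arrived on, and large transfers need two distinct approvers.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical limit above which two approvers are required.
DUAL_APPROVAL_THRESHOLD = 10_000


@dataclass
class TransferRequest:
    amount: float
    request_channel: str                     # how the request arrived, e.g. "voice_call"
    confirmed_channel: Optional[str] = None  # separate channel used to confirm it, if any
    approvers: Set[str] = field(default_factory=set)


def may_execute(req: TransferRequest) -> bool:
    """Return True only if the request passes both hypothetical policy rules."""
    # Out-of-band check: confirmation must come from a different channel than
    # the request itself, so a cloned voice on a call cannot vouch for itself.
    if req.confirmed_channel is None or req.confirmed_channel == req.request_channel:
        return False
    # Dual approval: large transfers need two distinct approvers.
    required = 2 if req.amount >= DUAL_APPROVAL_THRESHOLD else 1
    return len(req.approvers) >= required


urgent = TransferRequest(amount=240_000, request_channel="voice_call", approvers={"cfo"})
print(may_execute(urgent))  # False: no out-of-band confirmation yet
urgent.confirmed_channel = "callback_to_number_on_file"
urgent.approvers.add("controller")
print(may_execute(urgent))  # True: confirmed separately and approved by two people
```

The point of the design is that no single convincing voice or video can move money on its own; an attacker would have to compromise a second channel and a second person.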
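To see why the false positives mentioned under detection tools matter, consider a hypothetical detector that catches 95% of deepfakes but also flags 5% of genuine clips, applied to a stream in which 1% of items are fake. These figures are illustrative assumptions, not the measured performance of any named tool. Bayes' rule gives the share of alerts that are actually deepfakes:

```python
def detector_precision(sensitivity: float, false_positive_rate: float, prevalence: float) -> float:
    """Share of flagged items that are real deepfakes, via Bayes' rule."""
    true_alarms = sensitivity * prevalence                  # fakes correctly flagged
    false_alarms = false_positive_rate * (1.0 - prevalence)  # genuine items wrongly flagged
    return true_alarms / (true_alarms + false_alarms)


# Hypothetical figures: 95% sensitivity, 5% false-positive rate, 1% of items fake.
p = detector_precision(0.95, 0.05, 0.01)
print(f"{p:.1%} of flagged items are real deepfakes")  # 16.1%
```

In this scenario only about one alert in six is a real deepfake, which is one reason detector output should trigger human verification rather than automatic action.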
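As a rough illustration of the "cryptographic seal" idea under control over digital assets, the sketch below fingerprints a media file with SHA-256 and seals that fingerprint with an HMAC, using only Python's standard library. HMAC is a swapped-in simplification: anyone who verifies it must hold the same secret key, so seals meant for public verification would instead use an asymmetric digital signature (for example Ed25519), where only the company holds the private key. The function names and key handling are hypothetical.

```python
import hashlib
import hmac


def fingerprint(path: str, chunk_size: int = 1 << 20) -> bytes:
    """SHA-256 digest of a media file, read in chunks so large videos need little memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.digest()


def seal(path: str, key: bytes) -> str:
    """HMAC-SHA256 over the file's fingerprint (a stand-in for a real digital signature)."""
    return hmac.new(key, fingerprint(path), hashlib.sha256).hexdigest()


def verify(path: str, key: bytes, expected_seal: str) -> bool:
    """Recompute the seal and compare it in constant time."""
    # compare_digest avoids leaking, through timing, how many characters matched.
    return hmac.compare_digest(seal(path, key), expected_seal)
```

Any change to the file, even re-encoding a genuine video, produces a different fingerprint. A seal therefore proves that a file is byte-for-byte the released original; it does not judge whether the content of an unsealed file is authentic.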
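The threat-monitoring strategy usually relies on commercial threat-intelligence feeds, but the core filtering idea can be sketched in a few lines. This is a toy example: the executive names and risk terms are placeholders, and collecting posts from social media or dark-web sources (not shown) depends on each platform's own API and terms of use.

```python
import re
from typing import Iterable, List

# Hypothetical watch lists: executives to protect and words that often
# accompany claims about leaked or fake media.
EXECUTIVES = ["Jane Doe", "John Smith"]
RISK_TERMS = ["leaked video", "audio", "recording", "deepfake", "statement"]


def _word_pattern(phrases: List[str]) -> "re.Pattern[str]":
    """Case-insensitive regex matching any phrase as whole words."""
    alternatives = "|".join(re.escape(p) for p in phrases)
    return re.compile(r"\b(?:" + alternatives + r")\b", re.IGNORECASE)


NAME_RE = _word_pattern(EXECUTIVES)
RISK_RE = _word_pattern(RISK_TERMS)


def flag_posts(posts: Iterable[str]) -> List[str]:
    """Return posts that mention a watched executive together with a risk term."""
    return [p for p in posts if NAME_RE.search(p) and RISK_RE.search(p)]


posts = [
    "Leaked video shows Jane Doe announcing layoffs",
    "John Smith spoke at a conference",
    "New audio of someone",
]
print(flag_posts(posts))  # ['Leaked video shows Jane Doe announcing layoffs']
```

Keyword matching is noisy, so in practice a filter like this would only route candidates to a human analyst or a detection tool rather than decide on its own.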

5. Compliance with Regulations

Deepfakes aren’t just a security threat to businesses; they also raise legal and compliance problems. Several global laws and standards help manage these risks:

- India’s DPDP Act (2023): The Digital Personal Data Protection Act regulates the use, disclosure, and transfer of personal data, which can include an individual’s voice or facial features. This helps prevent the misuse of such data in deepfakes, and the Act provides penalties for violations.

- U.S. AI Executive Order (2023): The order promotes transparency in the use of AI, including guidance on watermarking AI-generated media so that deepfakes can be identified. Private companies should follow this guidance to stay aligned with emerging expectations.

- SOC 2 Standards: SOC 2 audits assess whether service providers have adequate controls to protect data and communications, which helps limit deepfake-related risks. Companies should confirm that their vendors and partners meet these standards.

- ISO/IEC 27001: Businesses that follow this international information security standard should include deepfake risks, and safeguards against fraudulent communications, in their risk management.

- NIST Cybersecurity Framework: This framework helps organizations identify, protect against, detect, respond to, and recover from threats, including deepfakes.

Adhering to these standards helps companies remain compliant and builds credibility with customers and partners by showing that they take deepfake risks seriously.

6. Why This Matters Now

Deepfakes are an emerging danger that can cost an organization dearly, both financially and in trust and reputation. Learning from past incidents, understanding the risks specific to each sector, and building a strong defense in depth can put organizations ahead. Technologies such as watermarking, combined with compliance with existing global regulations, can help curb the spread of false media. Above all, these measures build digital trust, a crucial asset in a world where it is increasingly hard to tell what is real.

Conclusion

Deepfakes present a real and growing threat to businesses, from bogus CEO videos to synthetic voice instructions. To defend themselves, firms need to understand how deepfakes work, learn from real cases, and apply practical defenses such as training, verification, and detection tools. Adhering to regulations and standards such as India’s DPDP Act and the U.S. AI Executive Order also helps build trust. The bottom line: with finances and reputations on the line, and customers learning not to believe their own eyes, acting now is essential.